Rethinking Your Data Science Toolkit: Insights for Leaders

Written on

Chapter 1: Understanding the Evolving Data Science Landscape

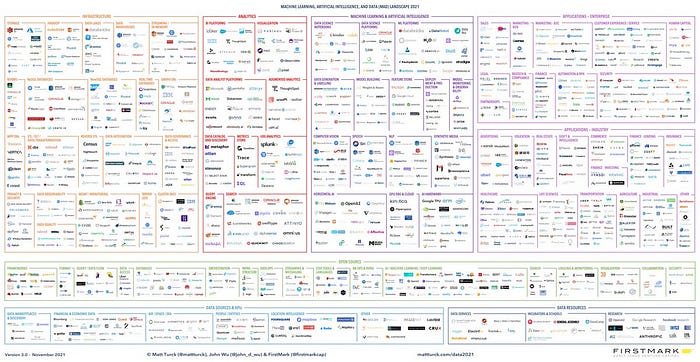

In previous discussions, I explored the progression of the data science technology stack and provided insights on how to operationalize machine learning effectively. While it would be ideal to have a single tool that addresses all challenges within data science, such an expectation is unrealistic.

As I embark on the journey of building a machine learning team from the ground up, I find it crucial to reassess how we choose tools and structures for machine learning and data science. My goal is to support new leaders, data scientists, and machine learning engineers in collaborating to enhance our workflows and overall efficiency.

Hamal Husain delivered an enlightening presentation on evaluating MLOps tools at Stanford last year, which I found particularly beneficial. Today, I'd like to share some of the key takeaways from his talk.

Section 1.2: Identifying Workflow Frictions

No tool is without its drawbacks. For instance, consider the comparison between PyTorch and TensorFlow (TFX), or Looker and Tableau, or even GCP and AWS. Hamel provided a pertinent example regarding TFX: "While the convenience of auto-EDA is appealing, basic operations that Pandas easily handles can turn into challenges with TFX." Such limitations can hinder effective feature engineering.

It's vital to recognize friction points in your workflow when utilizing various tools. If your results are lacking, it could be that your current tool is not meeting your needs.

Chapter 2: Cognitive Load and Boilerplate Challenges

As François Chollet, the creator of Keras, aptly stated, "If the cognitive load of a workflow is sufficiently low, a user should navigate it from memory after a few repetitions." Despite having a background in SQL, some business stakeholders I've worked with have struggled with LookerML, often giving up after a few attempts. Below is an illustration contrasting average order value (AOV) calculations in LookerML and BigQuery SQL. The effectiveness of one over the other truly depends on the specific use case.

Section 2.1: Scalability Considerations

Scalability presents a multifaceted challenge. Rapidly expanding startups often find themselves in a constant state of catching up. As a company grows, the tools must also evolve to meet its changing needs. Different stages of development require different tooling strategies. It’s wise to anticipate future needs over the next six months, one to three years, and beyond. Invest in infrastructure that supports both short-term and long-term goals.

On the flip side, as Donald Knuth wisely remarked, "Premature optimization is the root of all evil." I would hesitate to adopt a complex framework like TFX when dealing with small datasets. Should I prepare for potential future growth? Perhaps.

In the immediate term, if working with relatively simple machine learning pipelines, alternatives like PyTorch may be more suitable. However, as the complexity and size of your data increase, TFX can become an invaluable asset for managing technical debt and ensuring reliability.

With the rise of tools like ChatGPT, the data science landscape is poised for significant shifts in the coming years. It’s essential to continuously evaluate your ML stack and monitor industry trends while keeping an eye on your organization’s growth.

Section 2.2: Timing Your Tool Changes

Determining the right moment for a tool change is an art of technical leadership. In an upcoming post, I’ll delve deeper into navigating this process effectively.

Chapter 3: Avoiding Tool Zealotry

Falling into the trap of tool zealotry is easy. The allure of using tools from established tech giants, like Google, can be tempting, as can adopting industry-leading solutions like Tableau for data visualization. However, we must be mindful of potential frictions that may arise from over-reliance on these tools.

Hamel cautioned, "Don't be a tool zealot." Forming a data science team requires careful consideration of tool selection. Becoming overly attached to a specific tool can limit your problem-solving capabilities, introduce biases in task selection, hinder the recruitment of diverse talent, and create blind spots in your approach.

As Hamel suggested, regularly experiment with new tools, and maintain an open mind, even when time is limited.

Thank you for reading my newsletter! You can connect with me on LinkedIn or Twitter @Angelina_Magr.

Note: There are various perspectives to consider when addressing interview questions. This newsletter aims to provide quick insights to encourage further thought and research as needed.

Chapter 4: Expanding Knowledge through Video Resources

The first video, Stacks: A Data Structure Deep Dive! 🥞 - YouTube, offers an in-depth exploration of stacks as a data structure, providing valuable insights for data scientists.

The second video, Stack Data Structure Tutorial – Solve Coding Challenges - YouTube, presents a practical guide to using stacks to tackle coding challenges effectively.